Wallace Stevens had an immense insight into the way that we write the world. We don't just read it, we don't just see it, we don't just take it in. In "An Ordinary Evening in New Haven," he talks about the dialogue between what he calls the Naked Alpha and the Hierophant Omega, the beginning, the raw stuff of reality, and what we make of it. He also said “reality is an activity of the most august imagination.”

Our job is to imagine a better future, because if we can imagine it, we can create it. But it starts with that imagination. The future that we imagine shouldn't be a dystopian vision of robots that are wiping us out, or of climate change that is going to destroy our society. It should be a vision of how we will rise to the challenges that we face in the next century, how we will build an enduring civilization, and how we will build a world that is better for our children and grandchildren and great-grandchildren. It should be a vision that we will become one of those long-lasting species rather than a flash in the pan that wipes itself out because of its lack of foresight.

We are at a critical moment in human history. In the small, we are at a critical moment in our economy, where we have to make it work better for everyone, not just for a select few. But in the large, we have to make it better in the way that we deal with long-term challenges and long-term problems.

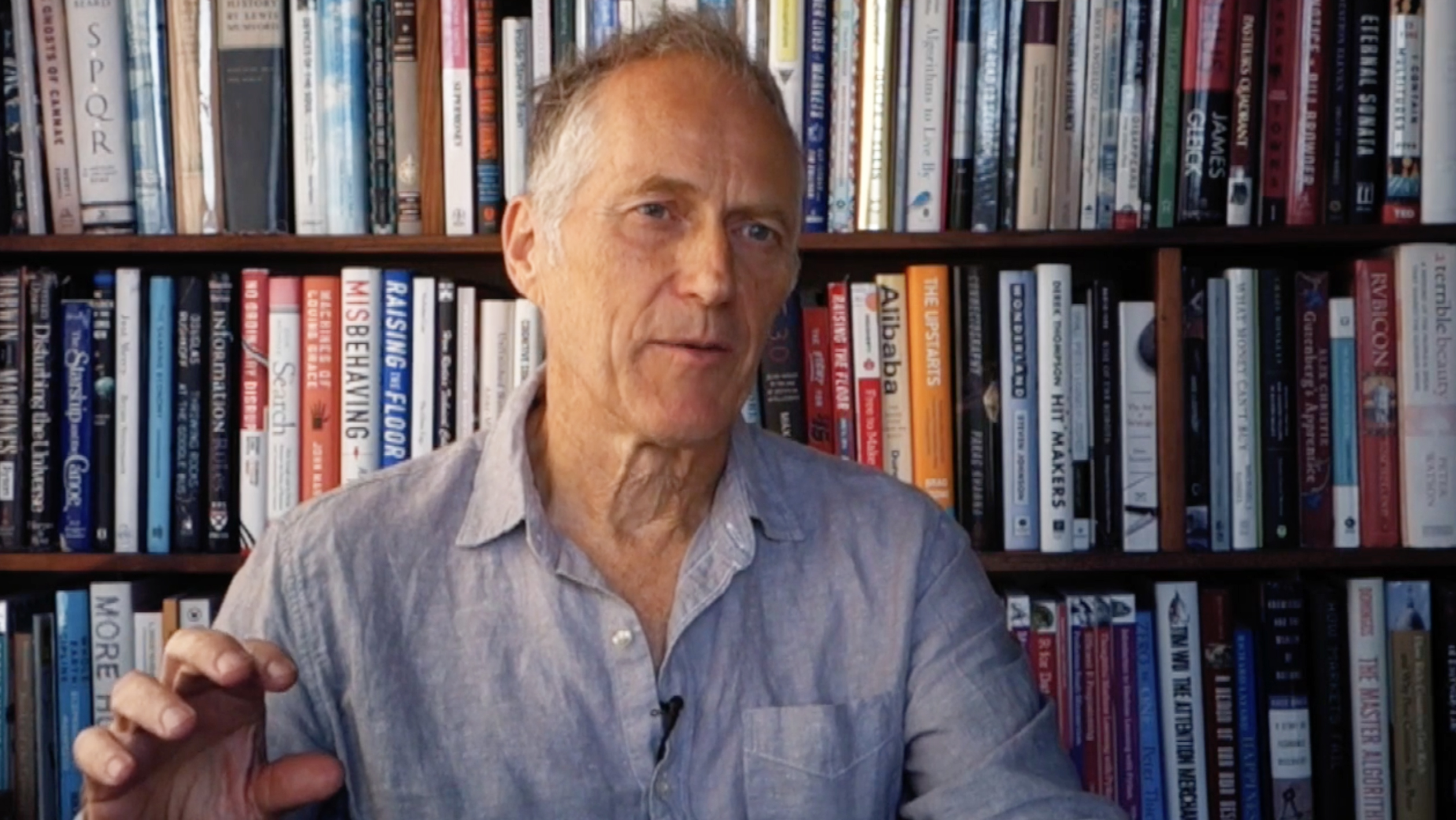

TIM O'REILLY is the founder and CEO of O'Reilly Media, Inc., and the author of WTF?: What’s the Future and Why It’s Up to Us.