A CHARACTERISTIC DIFFERENCE

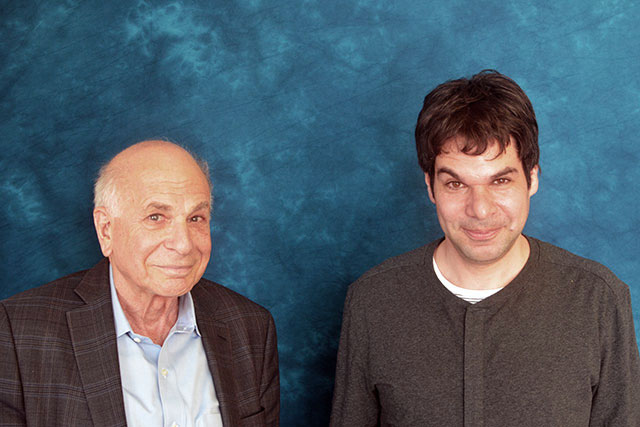

KAHNEMAN: Let's begin with an obvious question. What is experimental philosophy?

KNOBE: Experimental philosophy is this relatively new field at the border of philosophy and psychology. It's a group of people who are doing experiments of much the same kind you would see in psychology, but are informed by the much older intellectual tradition of philosophy. It can be seen as analogous, on a certain level, to some of the work that you've done at the border of psychology and economics, which uses the normal tools of psychological experiment to illuminate issues that would be of interest to economists.

Experimental philosophy is a field that uses the normal approaches to running psychological experiments to run experiments that are in some ways informed by these intellectual frameworks that come out of the world of philosophy.

KAHNEMAN: I read the review in the Annual Review of Psychology of which you were the senior author, in which you dealt with four topics. It was all highly condensed, and I don't pretend that I understood it all. I was struck by the fact that you run psychological experiments and you explain the results. There is something that sounds like a psychological theory, and yet, there was a characteristic difference, which I was trying to put my finger on.

There is a difference between the kinds of explanations that you guys seem to produce and the kinds of explanations that I would produce. I found myself with an alternative view of every one of the four topics. I was wondering if we could go into depth on that. Is there a difference? Is there a constraint? Is it because you are philosophers? Do you do things differently when you do psychology?

KNOBE: I doubt it would be helpful to think about it at that abstract level. It would be more helpful to think about one of the individual things.

KAHNEMAN: I'll give you two examples of the kind of thing that made me curious. The first one is on your earlier work on the role of moral reasoning in intentionality. This is something we talked about years ago. There seems to be an alternative story, which is not the one you discuss. First, describe the phenomenon, because otherwise nobody will know what we're talking about.

KNOBE: The key question at first might seem straightforward: How do people understand whether you do something intentionally or unintentionally—on purpose or not on purpose? At first it might seem like this question doesn't have anything to do with morality; it just has to do with what someone's mental states are, and how those states are related to people's actions. The surprising result at which we arrived is that people's moral judgments—judgments about whether something is morally good or morally bad—seem to impact their judgments about whether you did it intentionally.

An example that a lot of people may have already heard of involves the vice president and the chairman of the board. The vice president comes to the chairman of the board and says, "We've got this new policy; it's going to make huge amounts of money for our company, but it's also going to harm the environment." The chairman of the board says, "Look, I don't care at all about that. All I care about is making as much money as possible. Let's implement the policy." They implement the policy, and sure enough, it ends up harming the environment. Then participants are asked if the chairman of the board harmed the environment intentionally. Most people say yes. If you ask them why, they say that he knew he was going to harm the environment and he did it anyway. It's just a matter of which mental state he had.

We had the thought that maybe something more is going on here. In particular, maybe what's going on is that people say it's intentional because they think harming the environment is bad. You can see that there might be something to that if you just take the word "harm" and switch it to "help," but you leave everything else the same.

Suppose the chairman of the board gets this visit from the vice president, and the vice president says, "We've got this new policy; it's going to make huge amounts of money for our company, and it's also going to help the environment." The chairman of the board says, "Look, I know it's going to help the environment, but I don't care at all about that. All I care about is making as much money as possible." So they implement the policy, and sure enough, it helps the environment. In that case, participants overwhelmingly say that he helped the environment unintentionally. The only difference is between harm and help. Somehow, it seems like the difference between being morally bad and morally good is affecting your intuition about whether he did it intentionally or not.

KAHNEMAN: Can you give a sense of how you would explain that?

KNOBE: My explanation draws on some of your early work from the '80s, from the idea of norm theory. The idea is to consider the actual state that the chairman was in. His actual state was that he just didn't care at all. He was completely indifferent. You can think of that state as being the midpoint on a continuum.

On one hand, you can imagine someone who was trying as hard as he could to produce a goal, either to harm the environment or to help the environment. On the other hand, you can imagine someone who was trying as hard as he could to not do it, to avoid harming or helping the environment, but ended up harming or helping the environment nonetheless. The actual state is intermediate.

If we imagine what it is to do something intentionally, broadly speaking, it's to be pretty far on the goal-seeking end of the continuum. The further you are that way, the more intentional it is. The further you are in the opposite direction, the more unintentional. When you see someone in a particular state, what do you compare that to? In the case where he harms the environment, the first thing you compare it to is the state of someone who's trying to avoid harming the environment. When you compare it to that, he's pretty willing to harm the environment.

Now, in the case where he helps the environment, the first counterfactual you think of is the counterfactual in which he's actively trying to help the environment. Compared to that, he seems pretty not into helping the environment. This intermediate position—the position that's neither here nor there—in one case is seen as surprisingly willing, compared to what you would counterfactually consider that case, and in the other case, surprisingly reluctant, compared to the counterfactual that you would consider in that case. That's the explanation that I offer.

KAHNEMAN: By the way, that explanation, I thought, wasn't mentioned in the Annual Review piece. What you are saying is that moral judgment preexists in some way and infuses regular judgment as if those were two different categories. My sense was that the concept of norm is quite interesting because it does both things: it speaks of normal and it speaks of normative. Now we're linking to what you're saying. It is more abnormal to do bad things than to do good things.

KNOBE: That's exactly right. It's a mistake to think about it in the way that some researchers maybe have thought about it, that the way the human mind works is that there's this gap between our moral judgments and our factual judgments, and then there's a causal relation between them. Rather, we have a way of thinking about the world that doesn't involve that kind of distinction between prescriptive and statistical.

One way we've tried to test this is by using this notion of the normal that you addressed. In a series of studies, we asked people about the normal amount of various things. What's the normal amount of TV to watch in a day? What's the normal amount of drinks for a fraternity member to have on a weekend? What's the normal amount of students in a middle school to be bullied? Then for each of those things, we asked another group of people what the average amount of those things is. What's the average amount of TV to watch in a day? What's the average amount of drinks for a fraternity member to have on a weekend? Then we asked a third group what the ideal was. What's the ideal amount of TV to watch in a day? What's the ideal amount of drinks to have on a weekend?

Across all of these different items, people tend to think that the normal is intermediate between the average and the ideal. Exactly like you suggested. It seems like we have this notion of the normal that's not the notion of the average, or the notion of the ideal; it's a mixture.

KAHNEMAN: I agree. I've been interested in where that notion of the normal comes from. But the way that you talk about it struck me as interesting. You talk about it as if there were an expectation that pure cognitive judgment would be independent of moral judgment, and lo and behold, it's not independent. But why have that expectation? If we are agreeing that it's all about normality, which comes from relative frequency—some of it is abnormal because it's immoral, and some of it is abnormal just because it's rare, but there is no difference. So here you can have the whole theory that doesn't mention morality, except at the very end—the mere fact that immorality is abnormal. That's not quite the way you write it up. I'm trying to distinguish what you do from the kind of psychology that I do.

KNOBE: I feel like our disagreement seems like a funny one in that it seems like we both agree about what the right answer is, but we're just disagreeing about whether it's surprising. Maybe the sense in which we found it surprising is against the background of a certain view that a lot of people have about how the mind works.

A lot of people have this view that there's a certain part of the mind in charge of figuring out how things work, and then there are other representations or cognitive processes in charge of thinking about how things ought to be. Then there are causal relations between those. That's what we really wanted to attack. It seems as though the way the human mind works is that there's no clear distinction between the processes in charge of figuring out how things are and the processes of thinking about how they ought to be. There are these processes that are a hybrid of those, a mix or hodgepodge.

KAHNEMAN: Now it's becoming clearer what's happening here. There are two things. One is that your question and your basic concepts are borrowed from philosophy. You are talking about moral judgments infusing cognition. Basically, there is a psychological assumption, a mechanism, where moral judgment and cognitive judgment are still separate, but you're showing that they merge. Whereas, as a psychologist, I would immediately question why you assume that they are separate in the first place. What makes your question interesting is the philosophical background that distinguishes sharply between moral judgment and factual judgment, between is and ought.

KNOBE: You're right that it's against the backdrop of this assumption—which we both think is a mistaken assumption—that this result becomes especially interesting. Even though I completely agree with you about what the right answer is, a lot of researchers in the field of theory of mind—people who are just trying to understand how people ordinarily ascribe mental states—have this view that a good way to understand how theory of mind works, how people ordinarily make sense of each other's minds, is that it's something like a scientific theory.

KAHNEMAN: Let's look at another example. Can you describe the line of research on free will and the story that you tell about free will?

KNOBE: Another fundamental question that philosophers have often wondered about is about the relationship between free will and determinism. Suppose that everything we're doing is causally determined. Everything we do is caused by our own mental states, which are in turn caused by previous things back to some facts about our genes and our environment. Could we still be free? Philosophers have been wondering about this for thousands of years.

Incompatibilism says freedom and determinism are incompatible. If everything you do is causally determined, nothing you do could possibly be done of your free will. Compatibilism, this other view, says that these two things are compatible with each other. If everything you do is completely determined, say by your genes and your environment, as long as it was determined in the right way, you could still be doing something that was completely free. How can this be explained? Intuitively, an obvious hypothesis is that there are different things within our own minds that are pulling us in different directions, drawing us towards incompatibilism, but also toward compatibilism.

Maybe there's a difference between what happens when you think about the question in the abstract and when you think about the question in the concrete. If in the abstract you're thinking, here's the idea of determinism, here's the idea of freedom, are they compatible? Then people think no. But if you think of one individual person who did something morally bad, even if you think that person is completely determined, you're still going to be drawn to the idea that that person is morally responsible for what he did.

In a series of studies, we tried to vary this dimension of abstractness versus concreteness. We told people about a universe in which everything was completely determined by things that happened before. In the abstract condition, we asked if anyone in this universe could ever be morally responsible for anything they did. People overwhelmingly tended to say no one could be morally responsible for anything. In the concrete condition, we told them to imagine one person who does one morally bad thing in this universe and asked if that person was morally responsible for what he did. Then people tended to say yes, which is obviously a contradiction with the claim that no one can ever be morally responsible.

Our thought is that it's the tension between these two psychological processes, the ones involved in abstract cognition and in concrete cognition, that is giving rise to the problem of free will.

KAHNEMAN: Here is a different psychological take on that. When you ask people a question, they don't necessarily answer the question that you ask them. They could very well be answering a neighboring question. When you're asking about the question of free will, about a concrete example such as a crime, people might answer it in terms of how angry they feel: whether they blame the person, if they're angry with the person, if they would like to harm the person.

This is a question that perhaps somebody has never gotten before or has really not thought deeply about, so there is no obvious answer. Some of us think that when you ask people a difficult question, they don't answer it; they answer a different question that's simpler. On the issue of free will, when it is an emotional issue, that is, when you're angry, when there is a crime being committed (what you call emotional salience), there is an alternative view: the answer to the question of whether a person is free or responsible for his or her actions is determined by the fact that you're angry. You would not be angry if that person were not responsible. You infer that you think that person is responsible from the fact that you are angry.

KNOBE: First of all, I don't think that that is the right hypothesis, and I'll provide some evidence for that in a second. Suppose we assume that that is the right hypothesis—attribute substitution. Do you think this attribute substitution could plug into the basic story that I was offering originally? I was asking why this problem has been a problem for thousands of years, and suggesting that people are torn in these different directions depending on whether they think abstractly or concretely. If you now think the reason they give different answers in the abstract and the concrete is because of attribute substitution, do you think that could plug into this basic picture that the reason we've been worried about this so long and can't agree about it is because, in the concrete, we use this heuristic?

KAHNEMAN: This is not the way that you explain it. We'll talk about the details in a minute, but it is very much the same as the issue that we discussed a few minutes ago.

The concepts that you're bringing in are the concepts of philosophy. You're bringing them unchanged, with their baggage. Then, you find, you observe, you discover, that there is some relationship between these concepts that shouldn't be there, or that there is a variable that should not be influential but is. I do not recall that there is the possibility anywhere that people are not answering the question that you ask them. If you did introduce that possibility, wouldn't it make the philosophy look less interesting?

KNOBE: I think it wouldn't. But I also think that that possibility is false. The alternative that you suggest is that maybe people are being driven by anger. They have this emotional reaction, and instead of answering the question that's being posed—did he have free will?—they're answering this other question, is this outrageous?

There are two pieces of evidence against that idea that anger plays any role in it. One is if you look at participants who have a psychological deficit, a deficit in emotional reaction due to frontotemporal dementia, or FTD. These are people who experience less emotion than you or I would, so they have this deficit in the capacity for emotional response. If it was due to emotion, you'd expect the effect that I described to be moderated by this difference between FTD patients and us. But in fact, FTD patients showed just as much the tendency to say that these people are morally responsible as participants without FTD, indicating that whatever it is that makes us do it, it's not the fact that we feel anger at this.

KAHNEMAN: That brings back norm theory: normality and abnormality. When something truly unusual happens, we look for responsibility. There is more to explain when the event is extreme. The explanation that attributes causal efficacy to the agent is much more attractive when the action you're explaining is unusual and abnormal than when it is normal and customary. I was toying with two psychological interpretations.

KNOBE: That second one was possible, too, but tragically, I think it's also false. Subsequent studies have checked what happens when people are asked about someone who just decides to go jogging. Imagine you're in a completely deterministic universe, and you decide to go for a jog. Did you do that of your own free will? This is the least abnormal action.

People again tend to say you do that of your own free will. It feels like it's something about concreteness, per se, not about immoral actions or emotion. It's nothing about the abstract question, but rather thinking about a real individual human being doing something.

KAHNEMAN: I can see that. Let me think about it for a moment. [21:40-22:25: Kahneman thinking] Here is another way in which a psychological analysis might go. When I ask if a person is free, there are so many substitutes for that question.

The first and most obvious substitute is: Does that person feel free? It's a very different question, and the answer to it is much less interesting. It is true that that interpretation doesn't arise when you are asking the question in the abstract. When you're asking about concrete actions, that interpretation of the original question—is the person free?—in terms of does the person feel free, is quite an attractive interpretation. But that could be false as well.

KNOBE: That's really helpful. I don't know if you'll think that this new data point helps to illuminate the issue, but there's been a nice series of studies on this topic by Dylan Murray and Eddy Nahmias. They took the cases that we used and asked people a different question. They asked: Does the action that the person performed depend in any way on what the person wanted, thought, or decided? You might think that if everything is deterministic, it still does depend on that. It depends on that because it goes in a deterministic chain through that very thing.

When people are given the abstract question—in this whole universe, does the thing you do depend on your beliefs, desires, and values?—people say no. In the concrete case, they tend to say yes. I have the sense that whatever difference is obtained between the abstract and the concrete could be due to attribute substitution of some kind, but not to thinking that this person is really asking whether the person feels free.

KAHNEMAN: Attribute substitution is a psychologically richer concept than this. There are those experiments which ask first how many dates you had last month, then they ask how happy you are. There is a very high correlation between the number of dates and how happy you are. When you ask the questions in reverse order, you don't find that correlation.

Now, this clearly indicates that people use their happiness in the romantic area, which you have just evoked, to answer the question of how happy they are in general. They're not confused. They know the difference between happy in general and happy in the romantic area. They know the difference, but they answer one question in terms of the other without being aware of the substitution. It's not that they're conceptually confused.

Some of the questions that you ask naturally evoke alternative questions, neighboring questions. This is more likely to happen, clearly, in the concrete case than in the abstract case.

Again, what strikes me is that you don't start from the psychology of it. What I'm trying to get at is that you are starting from concepts that are drawn from philosophy, and you're using these concepts in a discourse about the psychology of intuitions. There seems to be a characteristic difference—and this is what I'm trying to draw out of you—between this way of thinking about experimental philosophy and the approach that is purely psychological.

If you started as a psychologist, you would look at these complicated, abstract questions, and you could say, or at least I'm inclined to say, that these questions are truly impossible; people must be doing something to make them intelligible to themselves in order to answer them. Then let's try to figure out the nearest we can come to a coherent description of how people answer this question in this context and that question in the other context. That strikes me as a natural way for a psychologist to go at your questions, and it is not what you're doing. That's what makes me curious. What is the difference in the way that we approach it?

KNOBE: It's an interesting question. In general, if you look at the processes that we invoke in order to explain these questions, there are psychological processes of a general sort. It's not that we are tending to invoke special philosophical processes. Our discussion that we just had about the case of intentional action had that character. What we were suggesting is that the process that explains this result is just a general fact about how people understand norms, and how people compare what actually happened to what's normal.

Where the two of us parted ways is in thinking about what's exciting about that or what's interesting about it. I was thinking the fact that people use this process—the process that we both think that people use—is exciting or interesting against the backdrop of this much larger philosophical framework. It didn't seem like we disagreed about why people show this pattern of responses. I felt—in a way that maybe you didn't—that we can think about what's interesting about that by thinking about the fact that people use this psychological process against the backdrop of this philosophical framework that a lot of people have.

KAHNEMAN: We're at the nub of the question. You come from philosophy, so there are certain things that are of interest to you. You want to convey two things at once: that the question is exciting, and that you have something new to say about it. It is true that I, as a psychologist, would come to the same question and conclude that it's an impossible question. Those are two impossible questions, and I certainly would not expect people to answer them in any way that is coherent.

My first assumption, coming to it as a psychologist, is that there is no coherence. You agree with me that there is no coherence. What makes it exciting from the point of view of philosophy is that there is no coherence. Whereas, as a psychologist, I take it for granted that there is no coherence, so it's less exciting. That could be one of the differences.

KNOBE: That's really helpful. The thing we showed is not just that it is incoherent but along which dimension it is incoherent. It seems like there was evidence already that there's something pulling us towards one side and something pulling us to the other side, and we want to know which thing is pulling us towards one side or the other. We suggested that it's this difference between abstract thinking and concrete thinking. I agree that part of the reason why you might care about that is because you might care about the question of whether human beings have free will.

If you find yourself untroubled by those questions, so you think free will...

KAHNEMAN: I could be deeply troubled by this question if I could imagine an answer to it. There is a set of questions to which I can imagine an answer. When do people feel free? That's a very exciting psychological question. When do people think that other people are free? That's a different question, also quite exciting. But those are psychological questions, and I would not assume any coherence.

KNOBE: You're saying, of course people's own intuitions contradict each other, against a certain backdrop. Why would you have thought otherwise?

KAHNEMAN: If you're a philosopher, you would never say, of course they contradict each other.

KNOBE: It's interesting that you bring up that specific point. I find that there is a real tradition within philosophy of thinking that there are these tensions that we seem to experience, this puzzlement, but that if we could just see the situation clearly, if we could understand clearly what's going on, then we'd see that everything coheres beautifully with everything else.

KAHNEMAN: Absolutely.

KNOBE: Then the idea that it's clarity that we'll get out of this is the idea that we are fighting against. In this case, it seems like the more you understand clearly what's going on, the more you see how genuinely puzzling it is that there is something pulling in one direction and something else pulling in another direction, and it's not as though those two things can be reconciled.

KAHNEMAN: This is truly an interesting point. The basic assumption is that there is some underlying coherence, and the task of the philosopher is to discover it. That really is the theme of this conversation, if you think about it. As a psychologist, I just don't make that assumption. It's obvious to me that in every context, including statistical intuitions, moral intuitions, and physical intuitions, they're not coherent. We have all sorts of intuitions, and if you try to connect them logically, they're not consistent. It's interesting, but it's a very general effect.

KNOBE: I wonder if you would accept the following way of thinking about it. When people are thinking about these questions psychologically, I almost feel like there are these two different forces at work in their way of thinking about them. One is just the data. People are trying to make sense of the data. As they look at the data, they tend to be drawn towards the view that best fits the data: that there is something incoherent within people. To take our other example, there's no clear division between people's prescriptive judgments and their descriptive judgments; these things are mixed in some way. The reason they're drawn to those views is that they are the best explanation of the available evidence.

There's this other force that plays a role in people's way of thinking about these questions, which isn't a force specifically among people in philosophy departments or psychology departments; it's something that takes hold of people when they start to think at a more abstract level about how this stuff works. When people think on this abstract level, they are drawn to the idea, "There's got to be this fundamental distinction between how people think about the way the world is and how people think about morality." Or they think, "There's got to be some underlying competence that's perfectly coherent, that is somehow shrouded over in some ways by these distorting factors."

Then there's a role that you can play as a theorist, of taking the thing that seems to be given by the evidence—and that people would create a model that fits at the level of this evidence—and reasserting it at the level of this abstract theory, saying "We should believe this thing. The thing that is given by the evidence is the thing we should believe. We shouldn't abandon it when we start to think more abstractly about these questions."

It seems like there's something, even among psychologists, that draws people toward thinking about things in this incorrect way when you start to think about them more abstractly, as opposed to trying to develop a model that predicts the data that you have available. Maybe you don't feel that force within you.

KAHNEMAN: No. I'm not sure I do. In my own career, I've made probably a lot of mileage out of the fact that people's intuitions are not coherent. It's true that the response to the work that Amos Tversky and I did, the surprise there was because we were showing time and time again that intuitions are not coherent. Now I have internalized that, and it's not surprising to me anymore. It is not surprising to a psychologist.

What is very interesting about experimental philosophy, as against experimental psychology, is that when you start from the assumption of coherence and then discover incoherence, you still phrase the discovery in the terms in which it should have been coherent and isn't. If you think that abstract questions and concrete questions are completely different, that is, when people think about ensembles, categories, or abstractions, they are doing something entirely different, then there is less puzzlement. We're asking a different question.

KNOBE: It might be instructive to think about how people within psychology most often reply to the kind of work that we are doing in experimental philosophy. People raise alternative explanations just the way that you have raised them, but the alternatives that people are normally worried about are different from the ones you're worried about. The worries you have are almost the converse of the worries that people usually have.

The usual worry, in the endless replies that I've been trying to beat back in my own work, is that deep down in people's minds is this thing that's beautifully coherent and perfectly scientific, and then there's just some annoying distorting force that's getting in the way of them expressing it correctly. If only we could come up with a way of getting rid of that distorting force, then the inner, coherent, perfectly scientific understanding that they had all along would be able to shine forth. People who say this don't just say it in the abstract in the way that I just said. They propose specific, testable things that would be a distorting force.

KAHNEMAN: I agree entirely that that intuition and the search for coherence is not restricted to philosophers. There's something to this reaction you're getting from psychologists who would be flattered that philosophers are doing psychology—"Oh, yeah, you're finding our work useful, and our approach to the world useful." This is a compliment to us, and it colors our reaction to the work.

I have another question of the same kind, which has to do with consciousness. Just describe the consciousness versus knowledge distinction that you advance.

KNOBE: We're interested in a question about a distinction between two different types of psychological states. On the one hand, there are states like believing in something, wanting something, intending something. On the other hand, there are states like experiencing pain, feeling happy, feeling upset. Sometimes philosophers say that those second kinds of states have this quality of phenomenal consciousness. What do you have to have in order to have those states, to be able to feel something?

One view you might have is that you have to be able to respond in a certain way to your world; that's what makes you conscious. Another way is what you're like physically. It's that idea that we thought might be onto something in terms of explaining people's intuitions, that there's something about our embodiment—the fact that we have bodies—that makes people think we're capable of having those states.

We tested it in a number of different ways. For example, think about the difference between a person and a corporation. Think about the difference between Bill Gates and Microsoft. You can say, "Bill Gates believes that profits will increase." You could also say something like, "Bill Gates is feeling depressed." You could say, "Bill Gates intends to release new products." You could also say something like, "Bill Gates is experiencing true joy."

If you ask about Microsoft, only one of those is possible. You could say, "Microsoft intends to release a new product"; that's fine. "Microsoft believes profits will increase"; that's also fine. "Microsoft is feeling depressed"; that's not good. "Microsoft is experiencing great joy"; that's not good. Corporations seem to have all of those states that don't seem to require a phenomenal consciousness, but they lack all the ones that do require a phenomenal consciousness. Across many different comparisons, not just between people and corporations, it seems like it's this embodiment that makes you see it as having those kinds of states.

KAHNEMAN: About corporations, we have some rules about how we attribute states of mind to corporations. Did the CIA know something? When you unpack this, it's not the CIA knowing, it's some people within the CIA. Who are the people within the CIA who have to know something before the CIA can be said to know that thing? That's a set of interesting questions, but they're more about the application of states of mind to corporations than about the deep philosophical questions. I was more interested in robots.

KNOBE: You get the same exact effects when you turn to robots. You have a robot that's just like you—Robo-Danny. On all psychological tests, the robot would answer exactly the same thing that you would answer. If we asked the robot if it experienced true love, the robot would answer and give the exact same answer that you gave. Now, if we asked people if that robot knows a lot about prospect theory, they would say absolutely. If we asked people if the robot thinks it's in the midst of a videotaped interview—absolutely. But then if you asked if the robot can feel happy, people tend to say no.

The robot acts just like you do, but people would say it doesn't feel anything. The key difference now between the robot and you is entirely a physical one. The robot acts just like you, but it's made of metal.

KAHNEMAN: Here, I differ. I have an alternative hypothesis, which is eminently testable. You now have robots that have facial expressions. If you had a robot that smiles appropriately, cries appropriately, and expresses emotions appropriately, people would attribute emotions to that robot, just as they do to other people. We attribute emotion to animals without asking questions about whether they feel it or not. It's just whether the expression is compelling.

If you had a robot who would answer some questions with a shaky voice, or would express it with a tense voice, or with an angry voice, my view is that people would very easily be convinced that that robot has feelings. The attribution of feelings derives from some physical cues that we get about emotions, which we have learned from our own emotions and the emotions of people around us when we're children.

KNOBE: I think almost the same thing that you said. It's not whether you believe that this thing is biological, or whether you believe that something has a body; it's whether the thing has certain cues that trigger you on this non-conscious level to think of it biologically. This table, we don't think of biologically. A human being, we do think of biologically. It's not just that we believe that a human being is something biological; it's that even if something wasn't biological, like this example you gave, if it moved in a certain way, if it made certain kinds of noises, it could trigger us to think of it as biological.

To test the hypothesis that it's not about your belief that the thing has a body but how much you're thinking of it as being embodied, we ran a study with human beings. We tried to manipulate the degree to which people think of human beings as having a body. The way we did this is just by showing people pictures of human beings in various states of undress. Like this, or taking their clothes off, or these pornographic pictures of people. Just as you might expect, if you look at those first kinds of states that I was talking about—the ability to reason and have self-control—the more you see someone as having a body, the less you see them as having any of those states.

With regard to these other states—the capacity to feel afraid, to feel upset, to experience pleasure, to feel happy—the more you take off your clothes, the more you're seen as having those kinds of states. It seems like there is something to what you're saying, that it's not just your knowledge of whether the other person has a body. The subliminal cues—the quick cues that you're picking up on—are not to something having emotions directly but rather to it being biological, cues to it having a body. A robot could give off cues to having a body.

KAHNEMAN: We are probably fairly close to agreement, although the language that you use to describe the findings is a fairly different language. It's very abstract, and it derives from another field.

KNOBE: That's the right way of thinking about it. As we keep going through each of these different things, I always feel like we're supposed to be getting into a fight, but then our fight never materializes. It's because it's not at the level of the cognitive mechanism underlying each finding.

KAHNEMAN: No, we agree on those.

KNOBE: It's at this level of ...

KAHNEMAN: What's interesting. It is a difference in tastes. Clearly, it reflects our backgrounds. As a teenager I discovered—that is what drew me into psychology—that I was more interested in indignation than I was interested in ethics. When you come from that direction, you have a different mindset than when you come from ethics to indignation.

KNOBE: Let's consider an analogy in this other field. A lot of times in behavioral economics, people try to show that a certain effect is interesting because they try to show a difference from what you'd do if you're engaged in rational choice theory. But then it seems very puzzling because why would you ever think people would do that? You might think in order for something to be interesting, it should be differing from whatever priors I had given from previous research or something, not from rational choice theory. Do you think rational choice theory somehow is playing an analogous role?

KAHNEMAN: I agree. That's a beautiful analogy. I hadn't seen it as clearly before as I see it now. It's very clear that we use economics in the same way. It's a background, and then we use it as a source of null hypotheses, we draw some concepts from it. Ultimately, to pursue the analogy a little further, a term like "preference" or "belief" has a particular meaning within rational choice theory.

The correct psychological answer is that in those terms, there are no preferences and there are no beliefs because whatever states of mind we have do not fulfill the logical conditions for being preferences or being beliefs. As a psychologist, I'm sometimes there and sometimes I argue with economists, and I do exactly the same thing that you do. The analogy is an excellent one.

DANIEL KAHNEMAN: We're at the nub of the question. You come from philosophy, so there are certain things that are of interest to you. You want to convey two things at once: that the question is exciting, and that you have something new to say about it. It is true that I, as a psychologist, would come to the same question and conclude that it's an impossible question. Those are two impossible questions, and I certainly would not expect people to answer them in any way that is coherent.
